Digital identity has spent years getting sharper at one job: proving you are the same person each time you show up online. That work matters, but it is no longer the whole problem. Today, the most persuasive “you” on the internet might not be you signing in. It might be your face in a clip, your voice in an audio note, or your mannerisms in a video call that never happened. When those signals can be copied and remixed at scale, identity stops being just an access question and becomes a media question.

That is why Denmark’s “Own Your Face” plan feels like more than a headline. It is an attempt to treat a person’s likeness as something that should be controllable in the same way an account is controllable. In other words, it points to a missing layer in the digital identity stack: a way to connect people to the media that claims to represent them, without forcing every viewer to become a forensic expert.

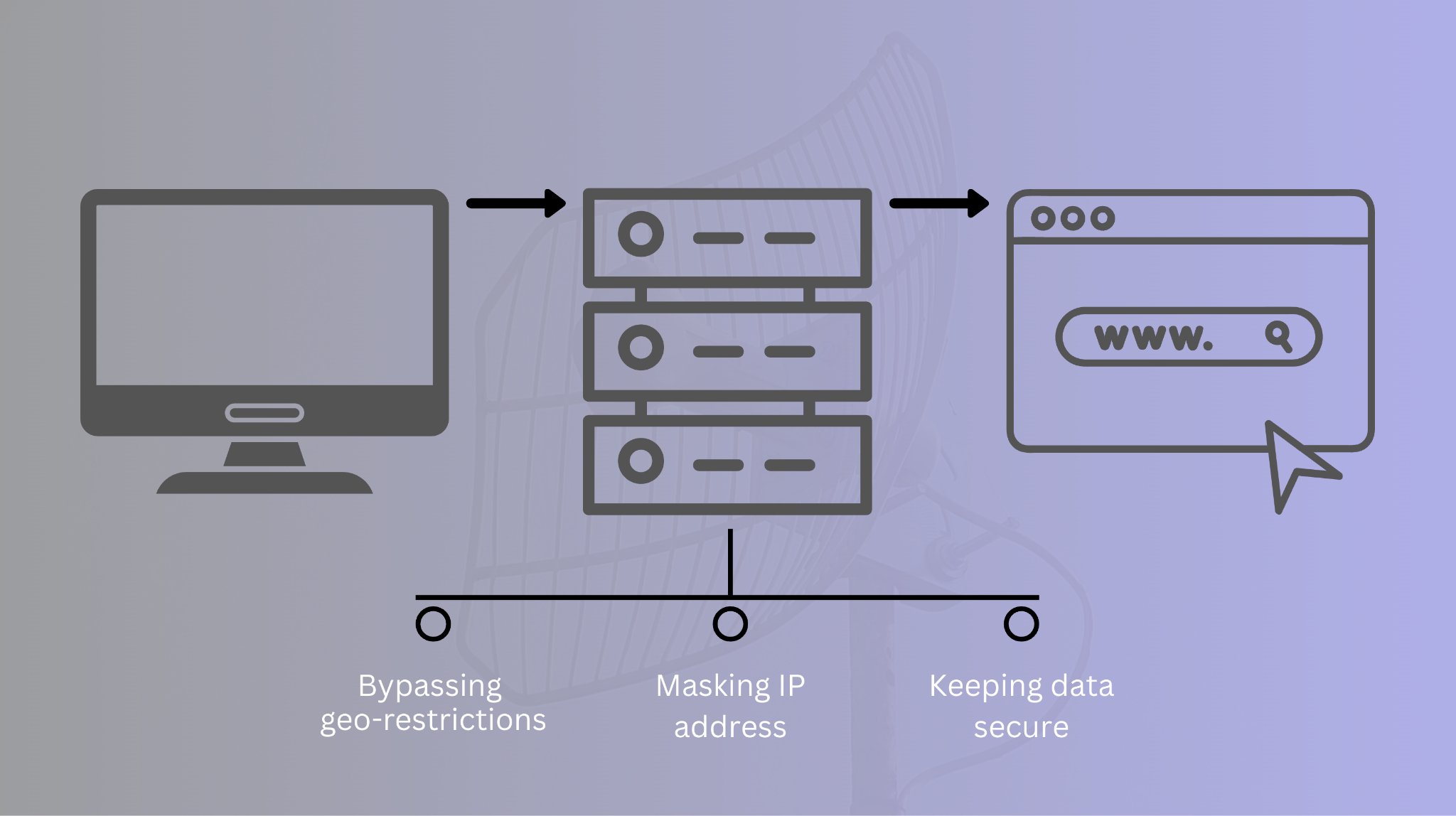

The Middle Layer That Helps Manage How Web Traffic Flows

A proxy server is easiest to understand as a controlled “in between” point. Instead of your device talking directly to a destination site, the request goes to a middle service that forwards it on, then returns the response. That sounds simple, but it creates a powerful place to shape how traffic moves.

In a forward setup, the proxy server represents the user side. Organizations use it to route outbound requests through a shared gateway, which can standardize security rules, manage authentication, and reduce direct exposure of internal systems. In a reverse setup, the proxy sits in front of a service and represents the service side, helping manage inbound traffic with load balancing, caching, and request filtering. Either way, the point is not “hiding” for its own sake. The point is control, consistency, and a cleaner boundary between systems.
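
To make the reverse setup concrete, here is a minimal in-memory sketch of the three jobs mentioned above: request filtering, caching, and load balancing. It is an illustration only, not how any particular proxy product works. The `ReverseProxy` class, the `make_backend` helper, and the `/admin` rule are hypothetical names invented for this example, and backends are modeled as plain functions rather than real network services.

```python
from dataclasses import dataclass, field
from itertools import cycle
from typing import Callable, Dict, Iterator, List

# Assumption: a backend is modeled as a function from request path to response body.
Backend = Callable[[str], str]


@dataclass
class ReverseProxy:
    """Toy reverse proxy: filters requests, caches responses, balances load."""

    backends: List[Backend]
    blocked_prefixes: List[str] = field(default_factory=list)
    cache: Dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Round-robin iterator that hands out backends in turn.
        self._next_backend: Iterator[Backend] = cycle(self.backends)

    def handle(self, path: str) -> str:
        # 1. Request filtering: refuse paths that match a blocked prefix.
        if any(path.startswith(prefix) for prefix in self.blocked_prefixes):
            return "403 Forbidden"
        # 2. Caching: reuse a stored response when one exists.
        if path in self.cache:
            return self.cache[path]
        # 3. Load balancing: pick the next backend in round-robin order.
        response = next(self._next_backend)(path)
        self.cache[path] = response
        return response


def make_backend(name: str) -> Backend:
    """Hypothetical backend that just reports which server answered."""
    return lambda path: f"{name} served {path}"


proxy = ReverseProxy(
    backends=[make_backend("app-1"), make_backend("app-2")],
    blocked_prefixes=["/admin"],
)
print(proxy.handle("/home"))     # app-1 served /home
print(proxy.handle("/about"))    # app-2 served /about
print(proxy.handle("/home"))     # app-1 served /home (from cache)
print(proxy.handle("/admin/x"))  # 403 Forbidden
```

The caller only ever talks to `handle`, which is the sense in which the middle layer gives a cleaner boundary: the rules live in one place and the backends never see blocked or cached requests.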

This is where proxies become more than just a small tech detail. Different kinds of proxies are good at different things: some focus on speed, some on steady, reliable routes, and some on very detailed access control (who can do what).

Image created by us specifically for this article.

Many companies use proxy providers that give them lots of IP addresses, different countries or cities to choose from, and rules for how often addresses change. This helps them test how their apps work in real-life conditions.

A safe proxy is one that:

- always handles data in a clear, predictable way,

- requires strong authentication, and

- does not secretly change or tamper with the traffic.

Good proxy providers explain how they work very clearly, because this “middle layer” is only helpful if you can trust what it does.

The connection to Denmark’s “Own Your Face” theme is structural. Digital identity already has ways to prove “who is asking.” What it lacks is a widely used middle layer that helps manage “what is being shown” when a person’s face or voice is involved. The proxy model is a reminder that adding a well-designed middle layer can change a messy, end-to-end problem into something systems can manage reliably.

When Identity Proof Stops at the Login Screen

If identity is only checked at the moment of access, then everything that happens after a login is treated as “someone’s content.” That assumption breaks down when realistic media can be generated, edited, and reposted faster than people can verify it. The result is a provenance gap: we can often verify who is using an account, but not whether a specific image, clip, or audio snippet has a trustworthy history.

A few recent data points show why this gap is so hard to ignore:

| Signal | Recent data point | Why it matters for digital identity |
|---|---|---|
| Public understanding of synthetic media | 50% of U.S. adults said they were not sure what a deepfake is (2023) | If many people cannot name the tactic, they cannot reliably judge it in the moment |
| Scale of what moves online | Videos comprise about 80% of internet traffic | The highest-impact identity impersonations often ride on video and audio |
| Expert attention to trust breakdown | Over 900 experts were surveyed for the Global Risks Report 2025 | Trust risks are being treated as mainstream infrastructure problems, not edge cases |

The point is not that everyone must become an expert in media detection. It is that identity systems need a parallel track for media integrity: where a piece of content came from, what changed, and what signals can be checked without guessing. That is the “missing layer” Denmark’s framing brings to the surface.

What “Owning Your Face” Looks Like in Practice

A workable version of “own your face” is less about a single rule and more about making authenticity portable. The most promising direction is to attach machine-checkable history to content at creation time, then preserve it as the content moves. The Coalition for Content Provenance and Authenticity describes this idea as recording provenance facts about a piece of digital content in a tamper-evident way, designed for global, opt-in adoption.

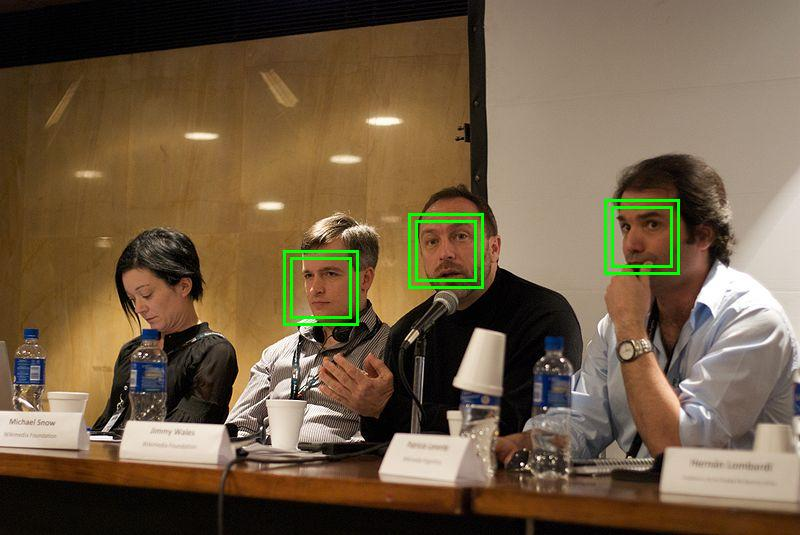

Face scanning and detection are becoming increasingly common across industries, which is why countries like Denmark are working to protect individuals.

This helps because it shifts verification from vibes to evidence. Instead of asking viewers to decide whether a clip “looks real,” a system can answer narrower questions: Was this captured by a known device or tool? Was it edited, and if so, how? Is the metadata intact, or was it stripped? Those are identity questions, just applied to the media.
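
The narrow questions above can be sketched as a small tamper-evident history check. This is a simplified illustration, not the C2PA format: standards such as C2PA define their own manifest structure and signing scheme. Here, an HMAC with shared demo keys stands in for real digital signatures, and the tool names, keys, and the `add_entry` and `check` functions are all hypothetical.

```python
import hashlib
import hmac
import json
from typing import Dict, List

# Assumption: demo keys for "known" tools. A real system would use
# public-key signatures, not shared secrets hard-coded in a script.
TRUSTED_TOOLS: Dict[str, bytes] = {
    "camera-app": b"demo-key-1",
    "editor-app": b"demo-key-2",
}
FIELDS = ("tool", "action", "content_hash", "prev")


def _digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def _sign(tool: str, payload: dict) -> str:
    message = json.dumps(payload, sort_keys=True).encode()
    return hmac.new(TRUSTED_TOOLS[tool], message, hashlib.sha256).hexdigest()


def add_entry(history: List[dict], tool: str, action: str, content: bytes) -> List[dict]:
    """Return a new history with a signed record linked to the previous one."""
    payload = {
        "tool": tool,
        "action": action,
        "content_hash": _digest(content),
        "prev": history[-1]["signature"] if history else None,
    }
    return history + [{**payload, "signature": _sign(tool, payload)}]


def check(history: List[dict], content: bytes) -> dict:
    """Answer: is there a history, from known tools, with edits, and intact?"""
    if not history:
        return {"has_history": False, "known_tools": False, "edited": None, "intact": False}
    known, intact, prev = True, True, None
    for entry in history:
        payload = {key: entry[key] for key in FIELDS}
        if entry["tool"] not in TRUSTED_TOOLS:
            known = intact = False  # cannot verify a record from an unknown tool
        elif not hmac.compare_digest(entry["signature"], _sign(entry["tool"], payload)):
            intact = False  # record was altered after signing
        if entry["prev"] != prev:
            intact = False  # chain link is broken or reordered
        prev = entry["signature"]
    if history[-1]["content_hash"] != _digest(content):
        intact = False  # content does not match the last recorded state
    edited = any(entry["action"] != "capture" for entry in history)
    return {"has_history": True, "known_tools": known, "edited": edited, "intact": intact}


original, cropped = b"raw video bytes", b"cropped video bytes"
history = add_entry([], "camera-app", "capture", original)
history = add_entry(history, "editor-app", "crop", cropped)
print(check(history, cropped))                # edited, and the history is intact
print(check(history, b"swapped face bytes"))  # content no longer matches the history
print(check([], cropped))                     # no history at all
```

Note that the last case reports a missing history rather than a fake: absence of provenance is a signal to weigh, not proof of manipulation.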

None of this is magic. Provenance signals can be missing, and honest content will sometimes lack them. But wide adoption changes the baseline. The Content Credentials initiative, for example, says the effort has grown to a collaboration involving 500+ companies, which suggests real momentum toward common signals that can travel with content.

How Content History Becomes Part of Your ID

This is where the Denmark conversation becomes useful even outside Denmark. It pushes digital identity to cover not only “who logged in,” but also “what represents me.” And it highlights a simple design goal: give people a clear, standard way to attach consent and authenticity to their likeness, so trust does not depend on guesswork. As the World Economic Forum put it, “Misinformation and disinformation remain top short-term risks for the second consecutive year.”